If you are interested in Artificial Intelligence, chances are you have heard about Artificial Neural Networks (ANNs) and Deep Neural Networks (DNNs). This article is about ANNs.

The boom in artificial intelligence may be recent, but the idea is old. The term AI was coined back in 1956. Its revival in the 21st century is often traced to the 2012 ImageNet challenge, where a deep neural network won by a wide margin. Before this, much of the work in the field went by names such as neural networks or expert systems.

At the foundation of modern AI are networks of artificial neurons, loosely modeled on the cells of a biological brain. Just as a neuron in the brain can be triggered by other neurons, an artificial neuron in an ANN is activated by the signals it receives from the neurons connected to it. Let’s take a closer look.

Artificial Neural Networks – Borrowing from human anatomy

Popularly known as an ANN, an Artificial Neural Network is a computational system inspired by the structure, learning ability, and processing power of the human brain.

ANNs are made of multiple nodes that imitate the neurons of the human brain. These nodes are connected by links and interact with each other: each node takes in data, performs a simple operation on it, and passes the result along its links to other nodes. Each link carries a weight, and the flow of information through the network during training changes those weights based on the inputs and the expected outputs.

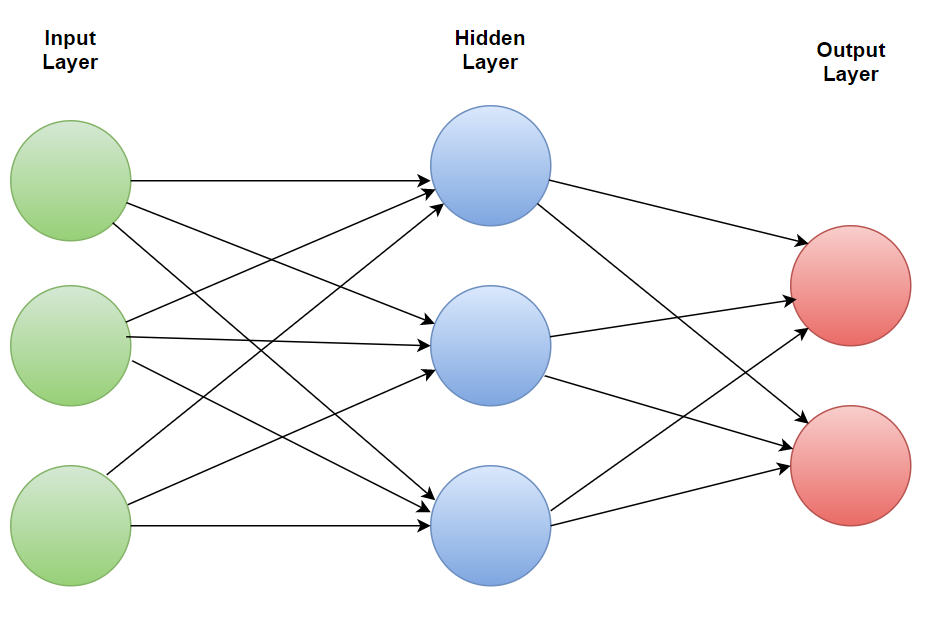

A simple, basic ANN is a “shallow” neural network that typically has only three layers of neurons:

- Input Layer. It accepts the inputs to the model.

- Hidden Layer. It transforms the inputs through weighted connections before passing them on.

- Output Layer. It generates predictions.
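The three layers above can be sketched as a single forward pass. This is a minimal illustration, not a reference implementation: the sigmoid activation, the layer sizes (3 inputs, 4 hidden neurons, 1 output), and the random weights are all assumptions chosen for the example, and the function names are hypothetical.

```python
import math
import random

def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(inputs, hidden_w, hidden_b)
    return dense(hidden, output_w, output_b)

# Assumed sizes for illustration: 3 inputs, 4 hidden neurons, 1 output neuron.
rng = random.Random(0)
hidden_w = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
hidden_b = [0.0] * 4
output_w = [[rng.uniform(-1, 1) for _ in range(4)]]
output_b = [0.0]

prediction = forward([0.5, -1.2, 3.0], hidden_w, hidden_b, output_w, output_b)
print(prediction)  # a list holding one value between 0 and 1
```

With untrained random weights the prediction is meaningless; the point is only to show how data moves from the input layer through the hidden layer to the output layer.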

Why use Artificial Neural Networks?

ANNs have several advantages over more conventional computing approaches. Some of them are:

- Massive Parallelism

ANNs can use a huge number of processors to do many computations at the same time (in parallel).

- Distributed Representation

ANNs have many weighted connections between their elements. Knowledge is not stored in any single element but is distributed across these connections.

- Learning Ability

ANNs can acquire knowledge through a learning process, similar to the human brain.

- Generalization Ability

A trained ANN can produce sensible outputs for data it has not seen before, that is, it can generalize. How well it generalizes depends on the complexity of the network and on its training.

- Fault Tolerance

ANNs are built from very simple neuron-like processing elements, but because these elements are present in huge numbers, the failure of a few has limited effect and the models are self-correcting, at least to a certain degree.

Two types of ANNs

Artificial Neural Networks are typically of two types – FeedForward and FeedBack.

A FeedForward ANN is one in which the flow of information is unidirectional, i.e. a unit sends information to the next unit and doesn’t receive any information in return. There are no feedback loops in this kind of network. FeedForward networks have fixed inputs and outputs and are used for pattern recognition and similar applications.

A FeedBack ANN allows feedback loops, so the output of a unit can flow back and influence its own later input. Such networks are used, for example, in content-addressable memories (CAMs).
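To make the idea of a content-addressable memory concrete, here is a small sketch of a Hopfield-style network, one well-known feedback architecture. The article does not name a specific model, so the choice of Hopfield, the Hebbian storage rule, and the two 8-element patterns are assumptions made for illustration.

```python
def train_hopfield(patterns):
    """Hebbian rule: w[i][j] accumulates p[i] * p[j] over the stored patterns."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, state, max_sweeps=10):
    """Update neurons one at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            # Feedback loop: each neuron's input is the other neurons' outputs.
            total = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two example patterns made of +1/-1 values (chosen for illustration).
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hopfield(stored)

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with one value flipped
print(recall(weights, noisy))  # → [1, 1, 1, 1, -1, -1, -1, -1]
```

The network is addressed by content rather than by location: given a corrupted version of a stored pattern, the feedback updates pull the state back to the closest stored one.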

Learning in ANNs – How does it happen?

Within artificial intelligence and machine learning, there are many learning strategies, and different learning algorithms suit different network architectures. Here is a look at a few of them:

- Supervised Learning

In this type of learning, the network is provided with the correct output for every input pattern. The weights are adjusted so that the network’s answers come as close as possible to the known correct ones.

- Unsupervised Learning

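This type of learning doesn’t require a correct answer for every input pattern in the training set. Instead, the network explores the underlying structure of the data, or the correlations between patterns, and organizes the patterns into categories based on them.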

- Hybrid Learning

This type of learning combines supervised and unsupervised learning: part of the weights are determined through supervised learning and the rest through unsupervised learning.
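Supervised learning, as described above, can be illustrated with a single artificial neuron trained on labeled examples. The sketch below uses the classic perceptron learning rule on the logical AND function; the rule, the learning rate, the number of epochs, and the function names are assumptions picked for the example, not something the article prescribes.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum exceeds zero, otherwise stay silent (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=10, learning_rate=1.0):
    """Nudge the weights whenever the prediction differs from the given label."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Labeled training set for logical AND: every input comes with its correct output.
and_examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(and_examples)
print([predict(weights, bias, x) for x, _ in and_examples])  # → [0, 0, 0, 1]
```

The only signal that drives learning here is the difference between the known correct output and the neuron’s answer, which is what makes the procedure supervised.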

4 Major Issues AI Engineers face with ANNs

These challenges are not peculiar to ANNs; they apply to artificial intelligence and machine learning engineering as a whole:

- Generalization vs. Memorization

Intelligent behavior requires both memorization and generalization. ML and AI engineers must balance the two: a network that fits its training data too closely merely memorizes it, while the goal is to generalize and make good predictions on data it has not yet seen.

- How to choose the network size?

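Choosing the number of layers and neurons, and therefore the number of trainable parameters in a neural network, is another practical issue faced by engineers. It involves working out the sizes of the input tensors (for example, images) and of the weights and biases in each layer.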

- How many training examples?

There is no blanket answer to this, which makes it even more complicated. The number of training examples one should use depends on the complexity of the problem and the algorithm chosen by the AI programmer.

- When to stop training?

How much training is enough? Deciding when a model is ready is one of the harder parts of building intelligent models. This is why a model is evaluated on a holdout validation dataset: training can stop once performance on that held-out data stops improving.
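Two of the questions above lend themselves to a quick calculation. The sketch below counts the weights and biases of a fully connected network and picks a stopping point from a series of validation losses. Both helper names, the `patience` setting, and the loss values are hypothetical and exist only to illustrate the idea.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network, e.g. [3, 4, 1]."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def early_stopping_epoch(validation_losses, patience=2):
    """Return the epoch with the best validation loss, giving up once the
    loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# 3 inputs, 4 hidden neurons, 1 output: (3 + 1) * 4 + (4 + 1) * 1 parameters.
print(count_parameters([3, 4, 1]))  # → 21

# Made-up validation losses, one per epoch, for illustration only.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
print(early_stopping_epoch(losses))  # → 3
```

In the example, the validation loss is lowest at epoch 3 and gets worse afterwards, so further training would mostly help the network memorize the training set rather than generalize.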

ANNs offer solutions to complex engineering problems that are difficult to solve with traditional methods. It’s vital for AI aspirants to gain expertise in Artificial Neural Networks and in how to train them.